[Trigger Warning: this post mentions suicide. The National Suicide Prevention Lifeline is 1-800-273-8255]

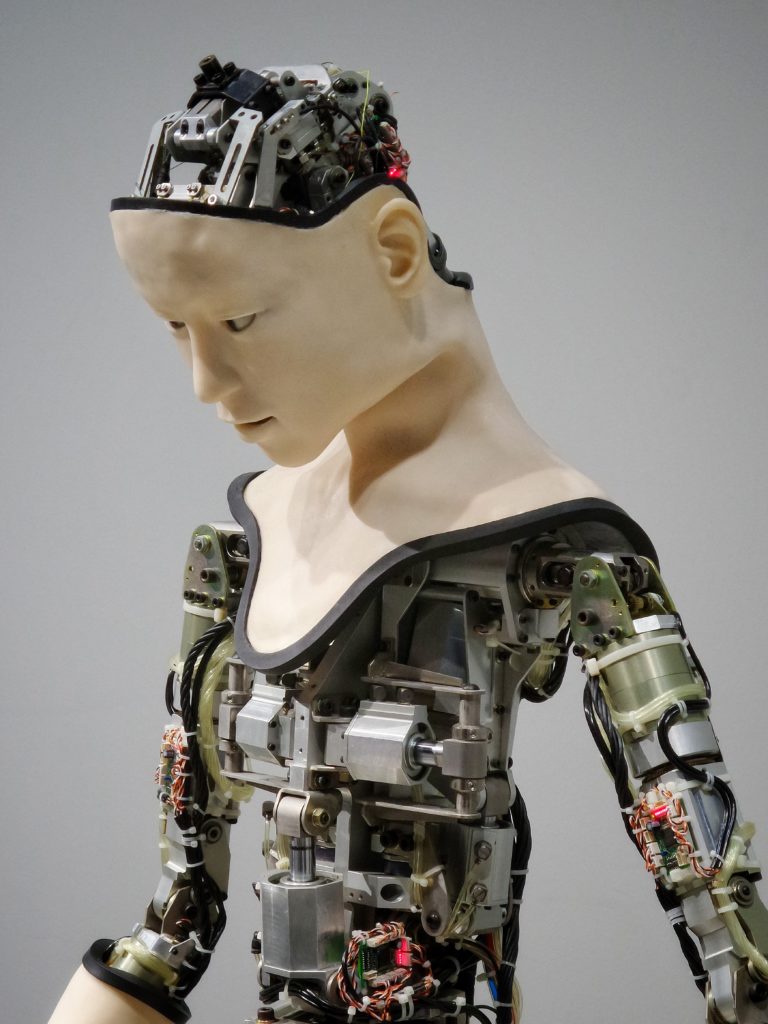

Photo by Possessed Photography on Unsplash

The Myers* family was devastated. Their teenage son Herbie died by suicide. He left no note, but a good look at his computer told the story. Herbie had visited a website that was filled with hateful messages about gay people. While Herbie’s parents had never talked to him directly about his sexuality, they understood and accepted that he was gay. What they didn’t understand was why the website that Herbie visited wasn’t blocked. (If you are in distress, the National Suicide Prevention Lifeline is 1-800-273-8255.)

The Myers had purchased filtering software for Herbie’s computer. The software was supposed to block any site associated with violent, hateful, or sexual content. The company claimed their software had helped prevent sixteen school shootings. Obviously, something was wrong with the software.

As an experiment, the Myers did searches for certain words to see what sites were returned. When they used the term LGBTQ, nothing was returned. However, “white rights” returned a number of sites, all filled with hate. When they searched for sites that offered counseling on sexuality, those sites were blocked.

They decided to investigate the web filter software. What they learned was that the algorithms used to filter undesirable material were easy to get around. For example, racist or Neo-Nazi groups used phrases like “new group to join” to attract young followers. They used language and memes that avoided explicit hate speech, but whose hateful intent was easy to understand.

While undesirable racist sites were accessible, legitimate sites relating to LGBTQ issues were filtered out. The Myers discovered that a number of useful sites that helped young people understand their sexuality were all blocked as pornographic. Unlike the hate groups, these legitimate sites had not found a way around the filtering algorithms.

The federal Children’s Internet Protection Act requires that public schools and libraries use web filtering. The Act was upheld by the Supreme Court, but the Court gave little guidance on how the filtering was to be done. Most public institutions rely on contracts with software companies. The courts have ruled that filters cannot be used to purposefully block access to protected content such as LGBTQ health information. But the courts haven’t ruled on whether algorithmic filtering falls under this prohibition, since it is virtually impossible to prove human intent behind the software’s construction.

What are the risks we run from the algorithmic filtering of information that children encounter online? How can we help parents be aware of the role of these algorithms—and of the gaps in filtering that could actually harm children? Just imagine the impact that “algorithmic parenting” can have on a child’s view of themselves, of our society, or of our democracy.

The filtering software used by the Myers didn’t seem to have a negative intent. But just imagine if it did. Just imagine how the developers of filtering algorithms could purposefully distort information provided to children—or to all of us. Just imagine what it would take to help children turn to their parents for sensitive information and critical conversations, rather than to an internet that has been filtered by a computer algorithm. What should be the role of algorithmic parenting?

* * *

“Censorship, like charity, should begin at home, but, unlike charity, it should end there.” – Clare Boothe Luce (Former U.S. Ambassador)

*This post is based on composites of real-world situations, with names and details changed for the sake of anonymity. Some references are here. This post is part of our “Just Imagine” series of occasional posts, inviting you to join us in imagining positive possibilities for a citizen-centered democracy.