Image by Tumisu from Pixabay

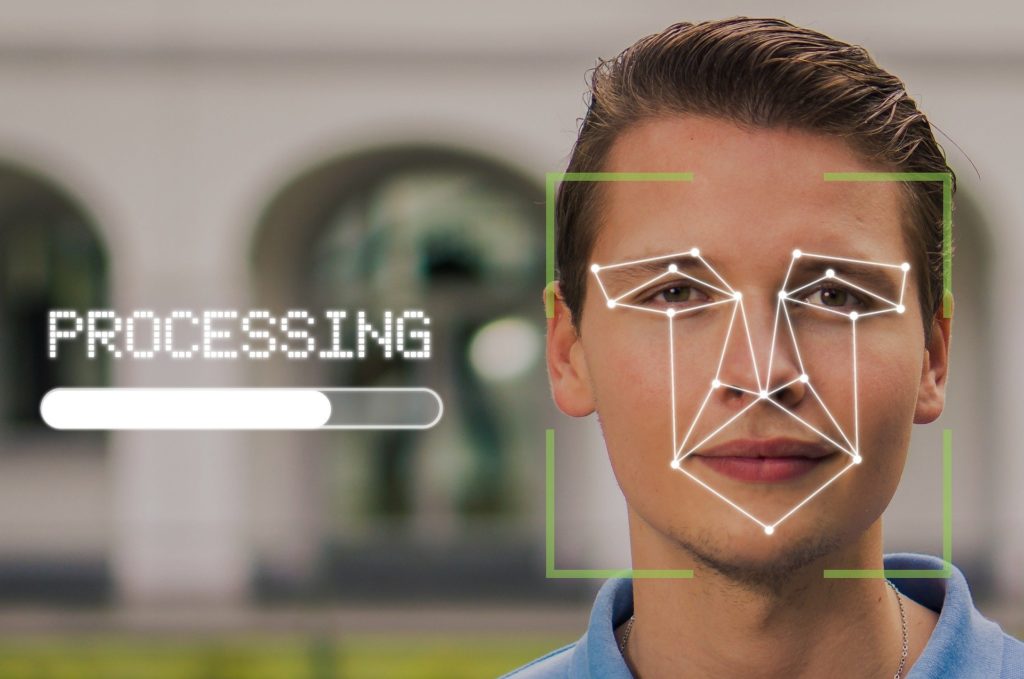

When domestic terrorists attacked the U.S. Capitol on January 6, 2021, the FBI was able to identify many of the terrorists using facial recognition technology (FRT). Artificial intelligence algorithms can match facial features from photos and videos of the riot to facial features from photos and videos posted on social media or provided by other sources. Most people might agree that in this case, technology is providing valuable support for the preservation of our democracy.

In another case, FRT was used to track down a protestor from a Black Lives Matter protest in New York City. The police arrested the protestor for shouting at a police officer through a bullhorn, allegedly causing hearing loss (the protestor maintains he was at least six feet away from the officer). There is some dispute whether the police had a legitimate right to go after the protestor, but there is no dispute that the police violated their own principles, specifically that FRT should only use photos from prior arrests in identifying a suspect. Apparently, the police used images culled from social media. What does this use of FRT mean for our First Amendment right to peacefully assemble or our Fourth Amendment right to be free from “unreasonable searches and seizures”? If our images are constantly being vacuumed up for FRT, how does this impact our status as a free society? As citizens of a democracy, what concerns do we have about government surveillance, including of our social media posts?

Like any technology based upon artificial intelligence, facial recognition technology builds in all the demographic biases of its human developers, along with the prevailing inequalities of our society. It has been well documented that FRT does the best job of recognizing white male faces, with high error rates for women and people of color. Given the high stakes for misidentification, these error rates are deeply concerning. Further compounding the situation, arrest photos are a key data source for facial imagery. We know that people of color are arrested at far higher rates than Whites for the same infractions, so their images will be overrepresented in the databases, amplifying the already higher likelihood of false positive matches. Such false positives can create a technologically enhanced vicious cycle, with growing numbers of mistaken identities leading to even more policing actions against Blacks and other racial minorities, which will contribute to the overrepresentation in the databases, and so on.

Even when there are known flaws, technological innovations can quietly gain unquestioned acceptance. Many of us are familiar with the notion of the “technological imperative,” which essentially says that because we can do something, we should do it. Once we have a technological capacity, it’s very hard not to use it. As the old saying goes, “to a person with a hammer, everything looks like a nail.” But of course, we shouldn’t go around hammering everything. We should question the technological imperative of using facial recognition technology. So, how should we as democratic citizens weigh the potential risks and benefits of facial recognition technology?

Technology is rapidly advancing, while our democracy has taken centuries to evolve. Changes in law enforcement and antiterrorism practices can happen quickly and in response to urgent situations. But democracy is slower. As a democratic society, how should we govern the use of this technology? And more generally, how should we govern any technology that could fundamentally alter core aspects of our democratic society, such as our foundational notions of privacy, freedom, and equality? What if we find that this technology poses unacceptable challenges to our democracy?

Just imagine how we might develop a faster response system that protects our democracy from technological advances. What might that look like? What if we had an approval agency for new technologies, just as we have the FDA for new drugs? Who should be involved in making these decisions? How can citizens have a voice in this process, to counterbalance the alluring power of technological advances and the outsized role of technical expertise that might simply be following the latest technological imperative?

Interested in exploring questions such as these in a collaborative way? Please join us for our upcoming free 3-part conversation series, What IF…? Conversations about Technology and Democracy, scheduled for June 24, July 1, and July 8 (7:00-8:30 pm EDT). Each session is distinct, so register for as many as you would like to join.

* * *

“The combination of hatred and technology is the greatest danger threatening mankind.” – Simon Wiesenthal (Holocaust survivor, Nazi hunter and writer)

This is part of our “Just Imagine” series of occasional posts, inviting you to join us in imagining positive possibilities for a citizen-centered democracy.